How to start

Authors

- Hio-Been Han, hiobeen@mit.edu

- SungJun Cho, sungjun.cho@psych.ox.ac.uk

- DaYoung Jung, dayoung@kist.re.kr

This guide explains how to access the dataset uploaded to the G-Node GIN repository hiobeen/Mouse-threat-and-escape-CBRAIN.

LFP (EEG) dataset

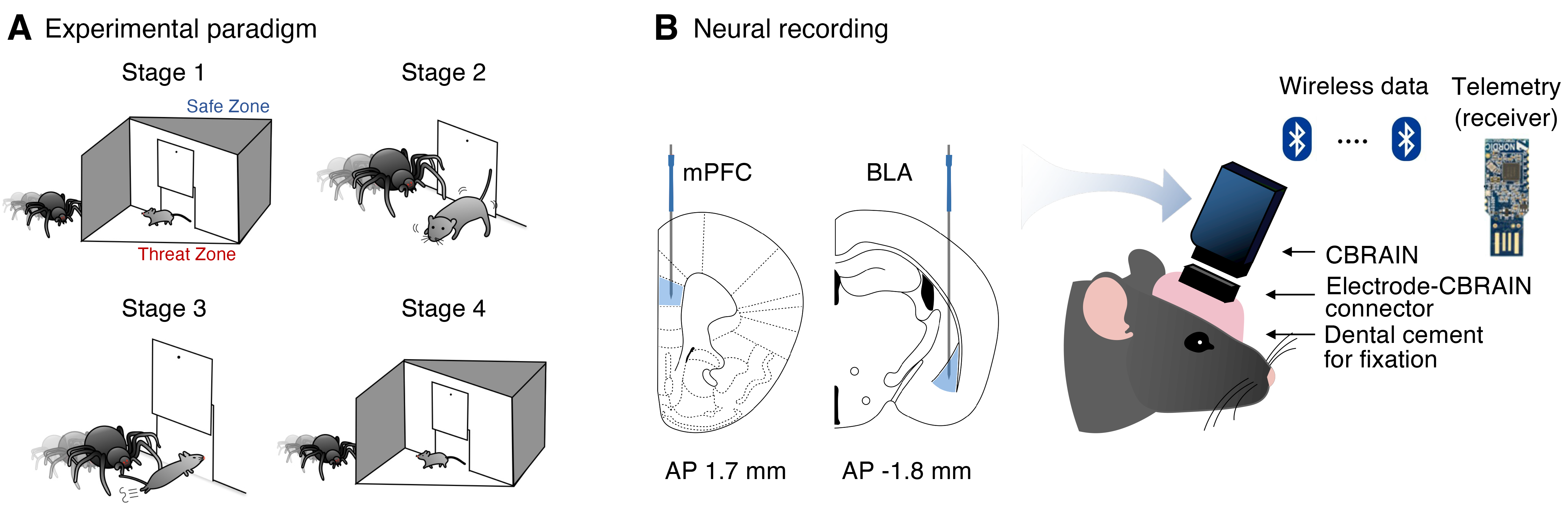

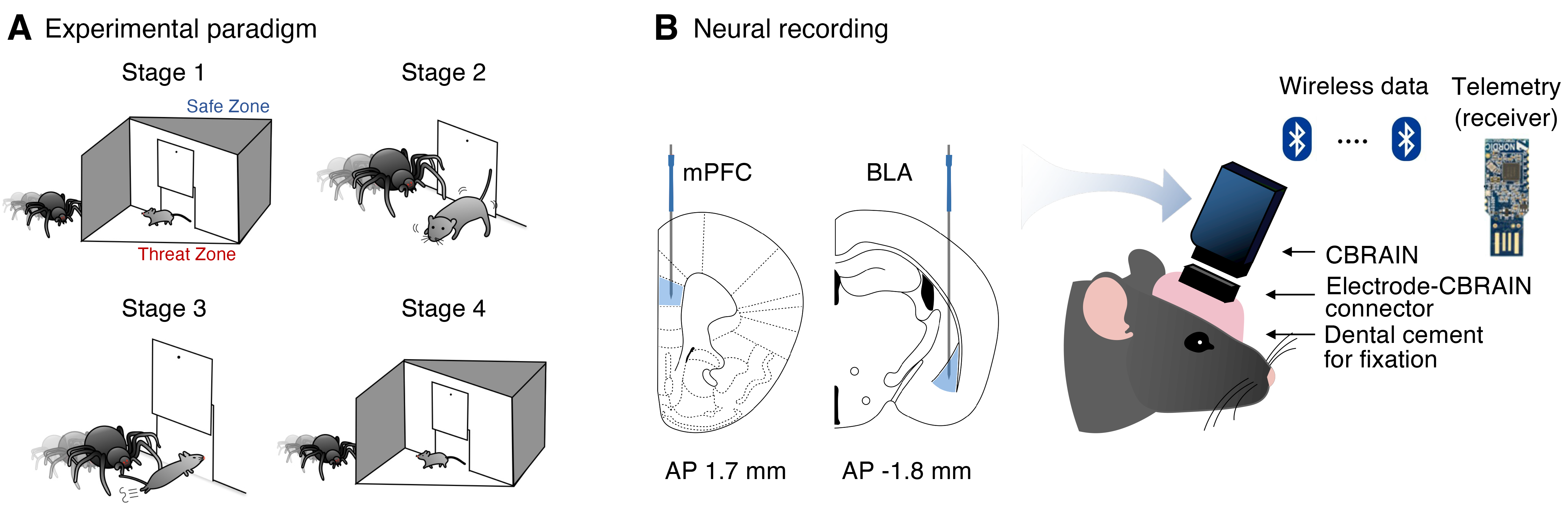

The structure of our dataset follows the BIDS-EEG format introduced by Pernet et al. (2019). Within the top-level directory data_BIDS, the LFP data are organized into subject directories named sub-*. These LFP recordings (n = 8 mice) were acquired under the threat-and-escape experimental paradigm, which involves dynamic interactions with a spider robot (Kim et al., 2020).

This experiment was conducted in two separate conditions: (1) the solitary condition (denoted as "Single"), in which a mouse was exposed to a robot alone in the arena, and (2) the group condition (denoted as "Group"), in which a group of mice encountered a robot together. A measurement device called the CBRAIN headstage was used to record LFP data at a sampling rate of 1024 Hz. The recordings were taken from the medial prefrontal cortex (Channel 1) and the basolateral amygdala (Channel 2).
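For quick reference, the recording parameters above can be kept as constants. This is a minimal sketch: the variable names (`ch_labels`, `n_samples`, `ch_bla`) are hypothetical, and the 240-s session length is taken from the stage description in Part 1-2, so `n_samples` is only the expected count if a recording is exactly 240 s long.

```matlab
% Recording parameters stated in this guide (variable names are illustrative)
srate     = 1024;              % sampling rate (Hz)
rec_dur   = 240;               % recording length per session (s), see Part 1-2
ch_labels = {'mPFC', 'BLA'};   % Channel 1: medial prefrontal cortex, Channel 2: basolateral amygdala

n_samples = srate * rec_dur;                  % expected number of samples per channel
ch_bla    = find(strcmp(ch_labels, 'BLA'));   % channel index of the amygdala recording

fprintf('%d samples per channel; BLA is channel %d\n', n_samples, ch_bla);
```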

For a more comprehensive understanding of the experimental methods and procedures, please refer to Kim et al. (2020).

IMPORTANT NOTE

To ensure compatibility with EEGLAB and consistency with the BIDS-EEG format, the term "EEG" is used throughout the dataset to refer to the data, although the signals were actually recorded as LFP. For convenience, the guidelines below also use the term "EEG".

Behavioral video & position tracking dataset

Videos recorded simultaneously with the LFP measurements are located in the directory data_BIDS/stimuli/video. Users can extract frames from these videos to directly compare mouse behavior (e.g., instantaneous movement parameters) with neural activity. We also provide the instantaneous position coordinates of each mouse extracted from the videos, located in the directory data_BIDS/stimuli/position.
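Comparing behavior with neural activity requires mapping each video frame to an EEG sample. The sketch below assumes a video frame rate of 30 frames/s (consistent with the 7200-frame arrays and the `frameIdx/30` conversion used in Part 2) and assumes that the video and LFP recordings start at the same moment; both assumptions should be checked against the original publications. `frame2time` and `frame2sample` are hypothetical helpers, not part of the dataset code.

```matlab
fps   = 30;     % assumed video frame rate (frames/s)
srate = 1024;   % LFP sampling rate (Hz)

% Map 1-based frame indices to 1-based EEG sample indices,
% assuming both recordings start at t = 0
frame2time   = @(f) (f - 1) / fps;                      % frame onset (s)
frame2sample = @(f) round(frame2time(f) * srate) + 1;   % nearest EEG sample

frames  = [1 4000 4030];
eeg_idx = frame2sample(frames);
disp(eeg_idx)
```

Here frame 1 is placed at t = 0, whereas the overlay example in Part 2-3 labels frames with `frameIdx/30`; the two conventions differ by one frame (about 33 ms).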

IMPORTANT NOTE

Note that the position tracking method differed between the two task conditions. For the solitary condition, positions were tracked with a CNN-based U-Net model (Ronneberger et al., 2015; see Han et al., 2023 for the detailed procedure), which estimates the location of the centroid of the mouse's body. For the group condition, a custom OpenCV-based script was used (see Kim et al., 2020 for details), which tracks the headstage mounted on each mouse and thus yields its head position.

Therefore, the position data for the two task conditions contain different information: body position in the solitary condition and headstage position in the group condition. Users should keep this difference in mind when analyzing these data.

References

- Pernet, C. R., Appelhoff, S., Gorgolewski, K. J., Flandin, G., Phillips, C., Delorme, A., Oostenveld, R. (2019). EEG-BIDS, an extension to the brain imaging data structure for electroencephalography. Scientific Data, 6(1):103.

- * Kim, J., Kim, C., Han, H. B., Cho, C. J., Yeom, W., Lee, S. Q., Choi, J. H. (2020). A bird’s-eye view of brain activity in socially interacting mice through mobile edge computing (MEC). Science Advances, 6(49): eabb9841.

- Ronneberger, O., Fischer, P., Brox, T. (2015). U-net: Convolutional networks for biomedical image segmentation. In Medical Image Computing and Computer-Assisted Intervention–MICCAI 2015: 18th International Conference, Munich, Germany, October 5-9, 2015, Proceedings, Part III 18 (pp. 234-241). Springer International Publishing.

- * Han, H. B., Shin, H. S., Jeong, Y., Kim, J., Choi, J. H. (2023). Dynamic switching of neural oscillations in the prefrontal–amygdala circuit for naturalistic freeze-or-flight. Proceedings of the National Academy of Sciences, 120(37): e230876212.

- * Cho, S., Choi, J. H. (2023). A guide towards optimal detection of transient oscillatory bursts with unknown parameters. Journal of Neural Engineering, 20:046007.

*: Publications that used this dataset.

User Guidelines

Part 0) Environment setup for EEGLAB

Because the EEG data are stored in the EEGLAB dataset format (.set/.fdt), the EEGLAB toolbox (Delorme & Makeig, 2004) must be installed to access them. For installation details, please refer to the following webpage: https://eeglab.org/others/How_to_download_EEGLAB.html.

% Adding eeglab-installed directory to MATLAB path

eeglab_dir = './subfunc/external/eeglab2023.0';

addpath(genpath(eeglab_dir));
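Before loading any data, it can help to confirm that the path setup worked. The check below is a small sketch that only tests whether EEGLAB's `pop_loadset` function (used throughout this guide) is visible on the MATLAB path; it does not verify the EEGLAB version or any plugins.

```matlab
% true if pop_loadset.m can be found on the MATLAB path
has_eeglab = exist('pop_loadset', 'file') == 2;

if ~has_eeglab
    warning('pop_loadset not found: check that eeglab_dir points to your EEGLAB installation.');
end
```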

Part 1) EEG data overview

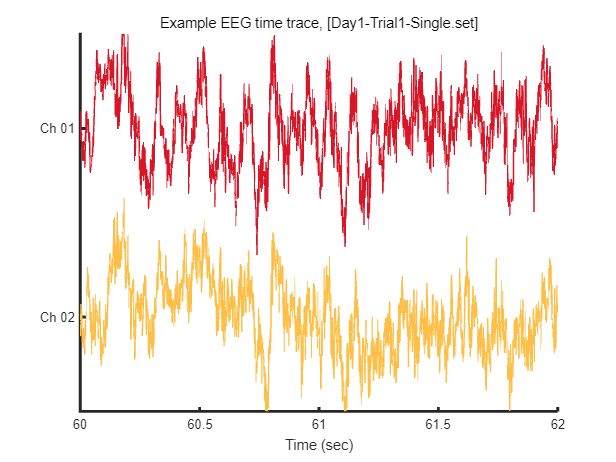

1-1) Loading an example EEG dataset

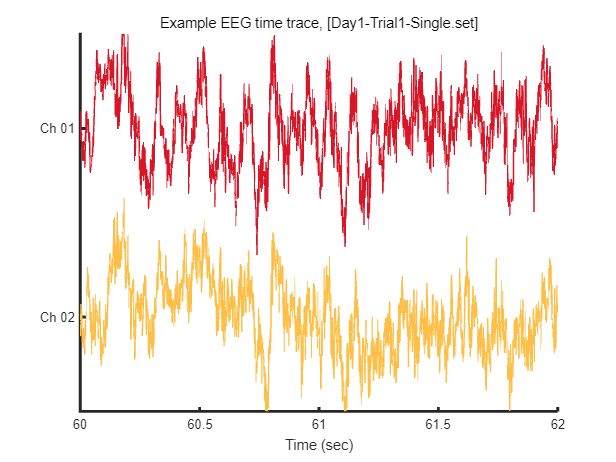

This example loads EEG data from a single session and visualizes a segment of it. We use Mouse 1, Session 1, recorded under the solitary threat condition.

path_base = './data_BIDS/';

mouse = 1;

sess = 1;

eeg_data_path = sprintf('%ssub-%02d/ses-%02d/eeg/',path_base, mouse, sess);

eeg_data_name = dir([eeg_data_path '*.set']);

EEG = pop_loadset('filename', eeg_data_name.name, 'filepath', eeg_data_name.folder, 'verbose', 'off');

Reading float file 'D:\Mouse-threat-and-escape-CBRAIN\data_BIDS\sub-01\ses-01\eeg\Day1-Trial1-Single.fdt'...
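A quick sanity check on the loaded dataset can catch path or file mix-ups early. The sketch below reads the standard EEGLAB `EEG` fields `nbchan`, `pnts`, and `srate`; `summarize_eeg` is a hypothetical helper, and the mock structure exists only so that the example runs without the data.

```matlab
% Summarize an EEGLAB dataset structure: channels, samples, duration
summarize_eeg = @(E) sprintf('%d channels, %d samples at %g Hz (%.1f s)', ...
    E.nbchan, E.pnts, E.srate, E.pnts / E.srate);

% Mock structure standing in for the EEG variable loaded above
EEG_mock = struct('nbchan', 2, 'pnts', 245760, 'srate', 1024);
msg = summarize_eeg(EEG_mock);
disp(msg)
```

With the real data, calling `disp(summarize_eeg(EEG))` right after `pop_loadset` should report 2 channels at 1024 Hz for these recordings.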

After loading the data, we can plot a short (2-second) segment of the EEG time trace as follows.

win = (1:2*EEG.srate) + EEG.srate*60; % arbitrary 2-second window starting at 60 s, for illustration

fig = figure(1); clf

plot_multichan( EEG.times(win), EEG.data(:,win));

xlabel('Time (sec)')

title(sprintf('Example EEG time trace, [%s]',eeg_data_name.name))

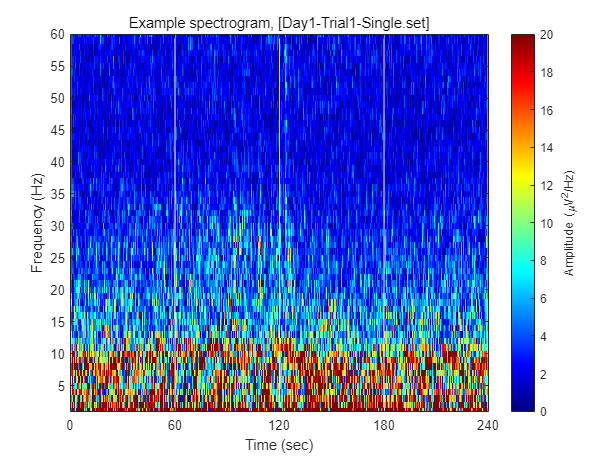

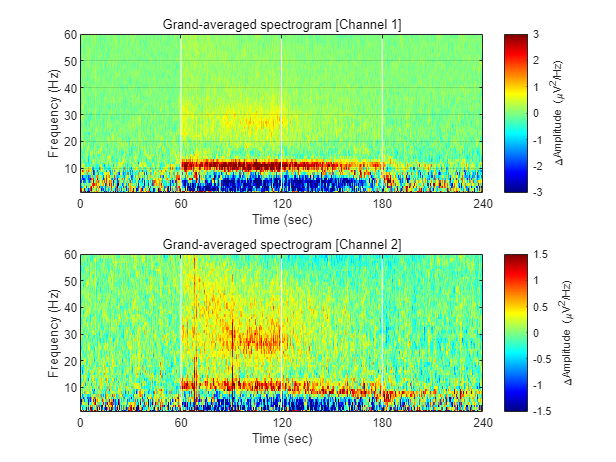

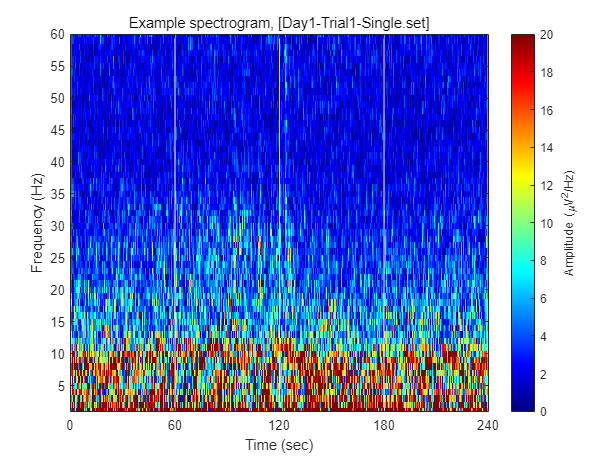
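The window above is hard-coded as 2 s starting at 60 s. A small helper that converts a start time and a duration in seconds into sample indices makes other segments easy to select. `time2win` is a hypothetical name, and it assumes that sample 1 corresponds to t = 0, as the `win` expression above does.

```matlab
srate = 1024;   % sampling rate (Hz)

% Sample indices covering [t_start, t_start + dur) seconds, with sample 1 at t = 0
time2win = @(t_start, dur) (1:round(dur * srate)) + round(t_start * srate);

win_example = time2win(60, 2);   % same window as the example above
fprintf('%d samples, from %d to %d\n', numel(win_example), win_example(1), win_example(end));
```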

1-2) Visualizing example spectrogram

Each dataset contains 240 seconds of EEG recorded during the threat-and-escape paradigm described in the original publication (Kim et al., 2020). To visualize the overall pattern of EEG activity in one example dataset, a spectrogram can be computed as follows.

The threat-and-escape procedure consists of four stages:

- Stage 1: (Robot absent) Baseline (0-60 sec)

- Stage 2: (Robot present) Robot attack (60-120 sec)

- Stage 3: (Robot present) Gate to safe zone open (120-180 sec)

- Stage 4: (Robot absent) No threat (180-240 sec)

% Calculating spectrogram

ch = 1;

[spec_d, spec_t, spec_f] = get_spectrogram( EEG.data(ch,:), EEG.times );

spec_d = spec_d*1000; % Unit scaling - millivolt to microvolt

% Visualization

fig = figure(2); clf

imagesc( spec_t, spec_f, spec_d' ); axis xy

xlabel('Time (sec)'); ylabel('Frequency (Hz)');

axis([0 240 1 60])

hold on

plot([1 1]*60*1,ylim,'w-');

plot([1 1]*60*2,ylim,'w-');

plot([1 1]*60*3,ylim,'w-');

set(gca, 'XTick', [0 60 120 180 240])

colormap('jet')

cbar=colorbar; ylabel(cbar, 'Amplitude (\muV^2/Hz)')

caxis([0 20])

title(sprintf('Example spectrogram, [%s]',eeg_data_name.name))

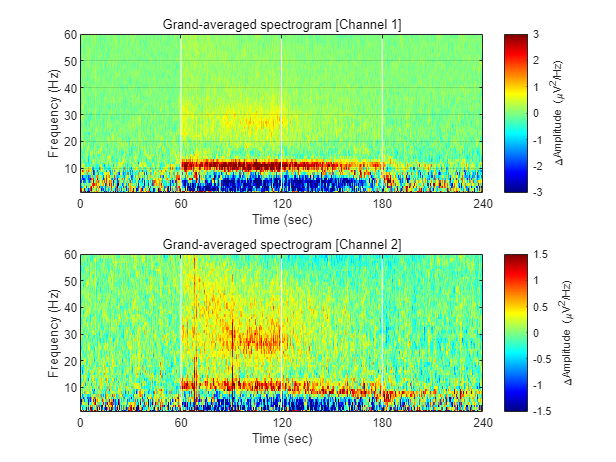
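To summarize a spectrogram numerically, one option is to label each time bin with its task stage (using the fixed 60-s boundaries listed above) and average within a frequency band per stage. This sketch assumes the spectrogram is a [time x frequency] matrix with a time vector in seconds and a frequency vector in Hz, as the `imagesc(spec_t, spec_f, spec_d')` call above suggests. `time2stage` is a hypothetical helper, the 4-8 Hz band is an arbitrary example, and the toy spectrogram (each value equals its stage number) only stands in for `get_spectrogram` output.

```matlab
% Map time (s) to task stage 1-4 using 60-s boundaries; t = 240 s goes to Stage 4
time2stage = @(t) min(floor(t / 60) + 1, 4);

% Toy spectrogram: 240 time bins (s) x 60 frequency bins (Hz)
toy_t = 0.5:1:239.5;
toy_f = 1:60;
toy_d = repmat(time2stage(toy_t(:)), 1, numel(toy_f));

band      = [4 8];                                   % example frequency band (Hz)
f_mask    = toy_f >= band(1) & toy_f <= band(2);
bin_stage = time2stage(toy_t);                       % stage label of each time bin

band_by_stage = zeros(1, 4);
for stage = 1:4
    band_by_stage(stage) = mean(mean(toy_d(bin_stage == stage, f_mask)));
end
disp(band_by_stage)
```

With real data, `spec_d`, `spec_t`, and `spec_f` from the code above would replace the toy variables.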

1-3) Visualizing grand-averaged spectrogram

n_mouse = 8; % number of mice

n_sess = 16; % number of sessions per mouse (8 sessions x 2 conditions)

n_ch = 2; % number of channels

spec = single([]);

for mouse = 1:n_mouse

for sess = 1:n_sess

eeg_data_path = sprintf('%ssub-%02d/ses-%02d/eeg/',path_base, mouse, sess);

eeg_data_name = dir([eeg_data_path '*.set']);

EEG = pop_loadset('filename', eeg_data_name.name, 'filepath', eeg_data_name.folder, 'verbose', 'off');

for ch = 1:n_ch

[spec_d, spec_t, spec_f] = get_spectrogram( EEG.data(ch,:), EEG.times );

spec(:,:,ch,mouse,sess) = spec_d*1000; % Unit scaling - millivolt to microvolt

end

end

end
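The session index alone does not state which condition a recording belongs to, but the file names do (for example, `Day1-Trial1-Single` for ses-01, as printed when loading in Part 1-1). One way to record this, sketched below with hypothetical variable names, is to test each file name for the condition label; inside the loop above, `eeg_data_name.name` would supply the names.

```matlab
% Example file names: the first follows the pattern printed by pop_loadset;
% the second is a hypothetical group-condition name
names = {'Day1-Trial1-Single.set', 'Day1-Trial1-Group.set'};

% true where the file name contains the label 'Single'
is_single = ~cellfun(@isempty, strfind(names, 'Single'));
disp(is_single)
```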

Now, we have spectrograms derived from all recordings (n = 8 mice x 8 sessions x 2 solitary/group conditions). Before visualizing the grand-averaged spectrogram, we will first perform baseline correction. This is done by subtracting the mean value of each frequency component during the baseline period (Stage 1, 0-60 sec).

fig = figure(1); clf

for ch = 1:2

% calculating grand-averaged spectrogram (mean over sessions 1-8 and all mice)

spec_avg = mean(mean(spec(:,:,ch,:,1:8),5),4);

% baseline correction: the first quarter of the time bins corresponds to Stage 1 (0-60 sec)

stage_1_win = 1:size( spec_avg,1 )/4;

spec_baseline = repmat( mean( spec_avg(stage_1_win,:), 1 ), [size(spec_avg,1), 1]);

spec_norm = spec_avg - spec_baseline;

% single channel visualization

subplot(2,1,ch)

imagesc( spec_t, spec_f, imgaussfilt( spec_norm', 1) ); axis xy

xlabel('Time (sec)'); ylabel('Frequency (Hz)');

axis([0 240 1 60])

hold on

plot([1 1]*60*1,ylim,'w-');

plot([1 1]*60*2,ylim,'w-');

plot([1 1]*60*3,ylim,'w-');

set(gca, 'XTick', [0 60 120 180 240])

colormap('jet')

cbar=colorbar; ylabel(cbar, '\DeltaAmplitude (\muV^2/Hz)')

caxis([-1 1]*3/ch)

title(sprintf('Grand-averaged spectrogram [Channel %d]',ch))

end
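The `repmat` step above can also be written with implicit expansion (MATLAB R2016b or later), which subtracts the per-frequency baseline mean from every time bin without building a full-size copy. A minimal sketch on a toy [time x frequency] matrix, assuming, as in the code above, that the first quarter of the time bins is the baseline:

```matlab
% Toy [time x frequency] spectrogram: 8 time bins x 3 frequency bins
toy_spec = [repmat([1 2 3], 2, 1); repmat([5 5 5], 6, 1)];

base_win = 1:floor(size(toy_spec, 1) / 4);               % first quarter = baseline
toy_norm = toy_spec - mean(toy_spec(base_win, :), 1);    % implicit expansion

% Same result as the repmat-based version
toy_norm_ref = toy_spec - repmat(mean(toy_spec(base_win, :), 1), [size(toy_spec, 1), 1]);
disp(isequal(toy_norm, toy_norm_ref))
```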

Part 2) Accessing position data

2-1) Loading position data (solitary)

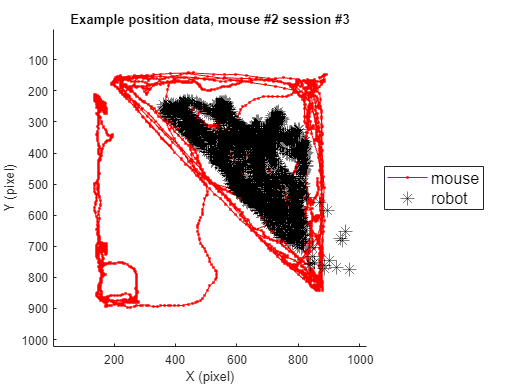

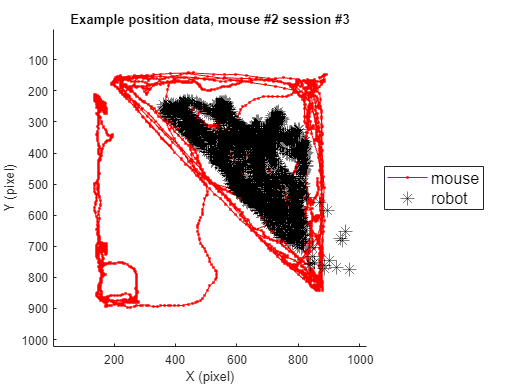

This example uses position data extracted from a sample video. We use one solitary threat session (mouse #2, session #3) to plot the trajectory of the mouse's movement in the arena.

path_base = './data_BIDS/';

path_to_load = [path_base 'stimuli/position/Single/'];

% Data loading

mouse = 2;

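sess = 3;

day = ceil(sess/4); trial = mod(sess,4); if ~trial, trial = 4; end % session index -> (day, trial), 4 trials per day as in 2-2

pos_fname = sprintf('%sMouse-Day%d-Trial%d-Mouse%d.csv',path_to_load,day,trial,mouse);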

pos = table2struct(readtable(pos_fname));

xy_mouse = clean_coordinates([[pos.x];[pos.y]]);

pos_fname = sprintf('%sRobot-Day%d-Trial%d-Mouse%d.csv',path_to_load,day,trial,mouse);

pos = table2struct(readtable(pos_fname));

xy_robot = clean_coordinates( [[pos.x];[pos.y]] );

% Visualization

figure(3); clf; hold on

plot( xy_mouse(1,:), xy_mouse(2,:), ...

'.-', 'Color', 'r');

axis([1 1024 1 1024])

plot( xy_robot(1,:), xy_robot(2,:), ...

'*', 'Color', 'k','MarkerSize',10);

set(gca, 'Box', 'off')

xlabel('X (pixel)');

ylabel('Y (pixel)');

legend({'mouse','robot'}, 'Location', 'eastoutside', 'FontSize', 12);

set(gca, 'YDir' ,'reverse');

title(sprintf('Example position data, mouse #%d session #%d',mouse, sess))

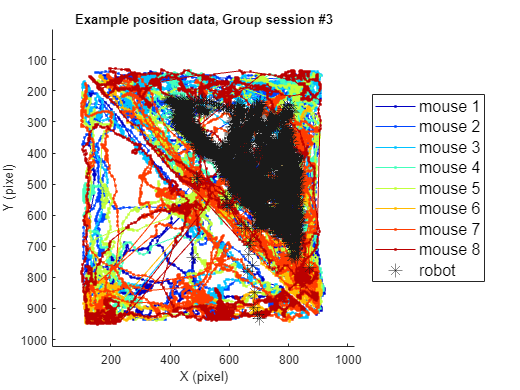
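Instantaneous movement parameters such as speed can be derived from a trajectory. The sketch below computes frame-to-frame speed in pixels per second from a 2 x T coordinate array shaped like `xy_mouse`, assuming 30 frames/s (the rate implied by the `frameIdx/30` conversion in Part 2-3). The toy trajectory is made up, and no smoothing is applied, so tracking jitter passes straight into the speed trace.

```matlab
fps = 30;   % assumed video frame rate (frames/s)

% Toy 2 x T trajectory (pixels): moves 3 px in x and 4 px in y per frame
xy = [0 3 6 9; 0 4 8 12];

step_px   = sqrt(sum(diff(xy, 1, 2).^2, 1));   % displacement between consecutive frames (px)
speed_pxs = [NaN, step_px * fps];              % speed (px/s); NaN for the first frame
disp(speed_pxs)
```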

2-2) Loading position data (group)

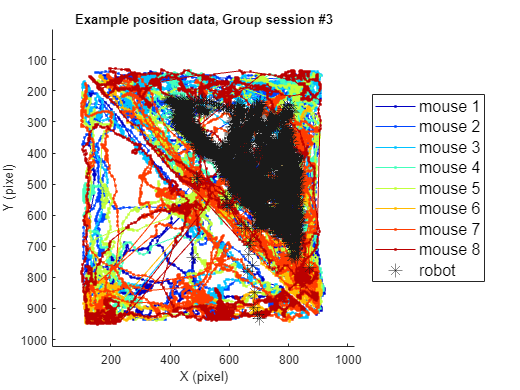

Using the same method, position data for the group threat condition can also be plotted as follows. Here, we use session #3 of the group threat condition.

path_base = './data_BIDS/';

path_to_load = [path_base 'stimuli/position/Group/'];

% Data loading

sess = 3;

xy_mouse = zeros([2,7200,8]); % [x/y, frames (240 s x 30 frames/s), mice]

xy_robot = zeros([2,7200,1]); % [x/y, frames, robot]

for mouse = 1:8

day = ceil(sess/4); trial = mod(sess,4); if ~trial,trial=4; end;

pos_fname = sprintf('%sMouse-Day%d-Trial%d-Mouse%d.csv',path_to_load,day,trial,mouse);

pos = table2struct(readtable(pos_fname));

xy_mouse(:,:,mouse) = clean_coordinates([[pos.x];[pos.y]]);

end

pos_fname = sprintf('%sRobot-Day%d-Trial%d.csv',path_to_load,day,trial);

pos = table2struct(readtable(pos_fname));

xy_robot(:,:,1) = clean_coordinates( [[pos.x];[pos.y]] );

% Visualization

figure(3); clf;

color_assign = imresize(colormap('jet'), [8, 3], 'nearest');

color_assign(9,:) = [.1 .1 .1];

plts =[]; labs = {};

for mouse = 1:8

plts(mouse)=plot( xy_mouse(1,:,mouse), xy_mouse(2,:,mouse), ...

'.-', 'Color', color_assign(mouse,:));

if mouse==1, hold on; end

labs{mouse} = sprintf('mouse %d',mouse);

end

axis([1 1024 1 1024])

plts(end+1)=plot( xy_robot(1,:), xy_robot(2,:), ...

'*', 'Color', color_assign(end,:),'MarkerSize',10);

set(gca, 'Box', 'off')

xlabel('X (pixel)');

ylabel('Y (pixel)');

labs{9} = 'robot';

legend(plts,labs, 'Location', 'eastoutside', 'FontSize', 12);

set(gca, 'YDir' ,'reverse');

title(sprintf('Example position data, Group session #%d',sess))

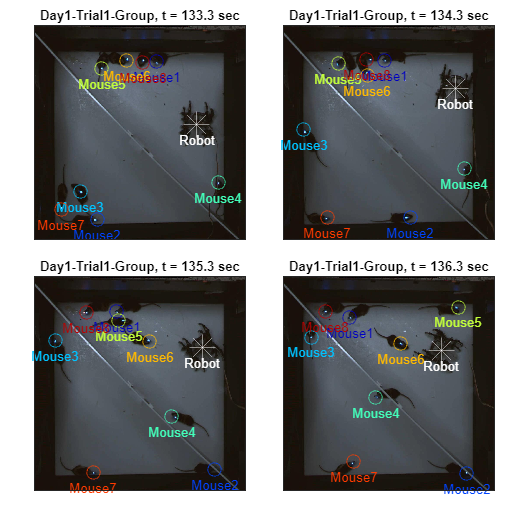

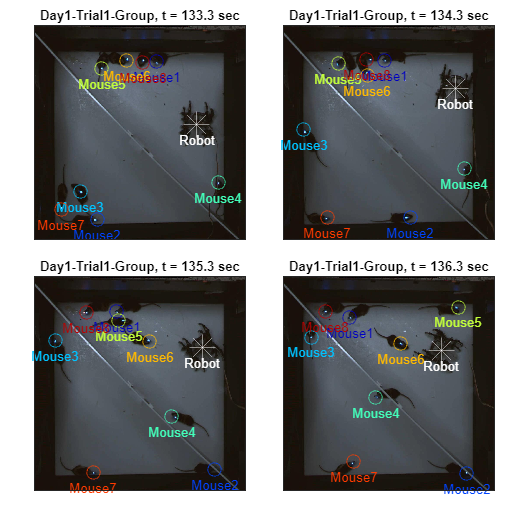
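With positions for all mice and the robot on the same frame grid, the distance from each mouse to the robot can be computed per frame. The sketch below assumes arrays shaped like `xy_mouse` (2 x frames x mice) and `xy_robot` (2 x frames) in the code above and relies on implicit expansion (MATLAB R2016b or later); the toy values and variable names are illustrative only.

```matlab
% Toy data: 3 frames, 2 mice; robot fixed at (0, 0)
xy_m = cat(3, [3 6 0; 4 8 0], [0 5 1; 1 12 0]);   % 2 x 3 x 2
xy_r = zeros(2, 3);                               % 2 x 3

% Euclidean distance (pixels) from each mouse to the robot, frames x mice
d = squeeze(sqrt(sum((xy_m - xy_r).^2, 1)));
disp(d)
```

With the real arrays, `d` would be 7200 x 8 for the group condition.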

2-3) Overlay with frame

The position data were extracted from the video frames (which can be exported from the attached videos using parse_video_rgb.m), so the coordinates are given in pixels. Positions can be overlaid on the frames with the following code; the example shows four consecutive frames at one-second intervals. The frames have a resolution of 1024 x 1024 pixels and the arena is a 60 x 60 cm square, which allows conversion to physical units. For further details, refer to the Methods sections of the original publications (Kim et al., 2020; Han et al., 2023).

% Select the session and convert it to (day, trial); 4 trials per day

sess = 1;

day = ceil(sess/4); trial = mod(sess,4); if ~trial, trial = 4; end

% Import position data (group condition)

path_to_load = './data_BIDS/stimuli/position/Group/';

for mouse = 1:8

pos_fname = sprintf('%sMouse-Day%d-Trial%d-Mouse%d.csv',path_to_load,day,trial,mouse);

pos = table2struct(readtable(pos_fname));

xy_mouse(:,:,mouse) = clean_coordinates([[pos.x];[pos.y]]);

end

pos_fname = sprintf('%sRobot-Day%d-Trial%d.csv',path_to_load,day,trial);

pos = table2struct(readtable(pos_fname));

xy_robot(:,:,1) = clean_coordinates( [[pos.x];[pos.y]] );

% Overlay frame image + position data

frames_path = './data_BIDS/stimuli/video_parsed/';

if ~isfolder(frames_path), parse_video_rgb(); end % extract frames if they have not been parsed yet

vidname = sprintf('Day%d-Trial%d-Group', day, trial); % same session as the position data

% Visualization

color_assign(9,:) = [1 1 1];

figure(4); set(gcf, 'Position',[0 0 1000 1000]);

tiledlayout('flow', 'TileSpacing', 'compact', 'Padding','compact')

for frameIdx = [4000, 4030, 4060, 4090]

% Import & draw frame

fname_jpg = sprintf(['%sGroup/%s/frame-%06d-%s.jpg'],frames_path,vidname,frameIdx,vidname);

frame = imread( fname_jpg );

nexttile;

imagesc(frame);

hold on

% Mouse coordinates overlay

for mouse = 1:8

plot( xy_mouse(1,frameIdx,mouse),xy_mouse(2,frameIdx,mouse), 'o', ...

'MarkerSize', 10, 'Color', color_assign(mouse,:))

text(xy_mouse(1,frameIdx,mouse),xy_mouse(2,frameIdx,mouse)+70,sprintf('Mouse%d',mouse),...

'Color', color_assign(mouse,:), 'FontSize', 10, 'HorizontalAlignment','center');

end

% Spider coordinates overlay

plot( xy_robot(1,frameIdx),xy_robot(2,frameIdx), '*', ...

'MarkerSize', 20, 'Color', color_assign(end,:))

text(xy_robot(1,frameIdx),xy_robot(2,frameIdx)+70,'Robot',...

'Color', color_assign(end,:), 'FontSize', 10, 'HorizontalAlignment','center');

title(sprintf('%s, t = %.1f sec', vidname, frameIdx/30));

ylim([24 1000]); xlim([24 1000]); set(gca, 'XTick', [], 'YTick', [])

end
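As noted above, the 1024 x 1024-pixel frames and the 60 x 60 cm arena allow pixel coordinates to be converted to physical units. A minimal sketch, assuming the arena spans the full 1024-pixel frame; if it does not fill the frame exactly, the scale factor should be recalibrated from the arena edges in a frame image. The example position is made up.

```matlab
arena_cm  = 60;                    % arena side length (cm)
frame_px  = 1024;                  % frame width/height (pixels)
cm_per_px = arena_cm / frame_px;   % assumes the arena spans the full frame

xy_px = [512; 256];                % example position (pixels)
xy_cm = xy_px * cm_per_px;         % position (cm)
disp(xy_cm.')
```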