README.md 11 KB

Zooniverse campaign

Summary

The present repository showcases the organization of a Zooniverse campaign using ChildProject and DataLad.

This campaign requires citizens to listen to 500 ms audio clips and to perform the following tasks:

- Decide whether they hear speech from either a Baby, a Child, an Adolescent, an Adult, or no speech.

- Guess the gender of the speaker in case of non-baby speech.

- Classify the type of sound among four categories (Canonical, Non-Canonical, Laughing, Crying).

Workflow

- We used DataLad to manage this campaign.

- The primary dataset (containing the audio and the metadata) was included in this repository as a subdataset. It was structured according to ChildProject's standards.

- ChildProject was used to generate the samples, to upload the audio chunks to zooniverse, and to retrieve the classifications.

This repository contains all the scripts that we used to implement this workflow. You are welcome to re-use this code and adapt it to your needs.

Repository structure

annotationscontains annotations built from the classifications retrieved from Zooniverse.classificationscontains the classifications retrieved from Zooniverse.samplescontains the samples that were selected as well as the chunks generated from them.vandam-datais a subdataset containing VanDam Daylong corpus, structed according to ChildProject's standards.

Preparing samples

Sampling

Sampling consists in selecting which portions of the recordings should be annotated by humans. It can be done through using the samplers provided in the ChildProject package.

Here, we sample 50 vocalizations per recording among all those detected and attributed to the key child (CHI) or a female adult (FEM) by the Voice Type Classifier:

# download VTC annotations

datalad get vandam-data/annotations/vtc/converted

# sample random CHI and FEM vocalizations from these annotations

child-project sampler vandam-data samples/chi_fem/ random-vocalizations \

--annotation-set vtc \

--target-speaker-type CHI FEM \

--sample-size 50 \

--by recording_filename

The sampler produces a CSV dataframe as samples/chi_fem/segments_YYYYMMDD_HHMMSS.csv, e.g. samples/chi_fem/segments_20210716_184443.csv.

Preparing the chunks for Zooniverse

After the samples have been generated, they have to be extracted from the audio and uploaded to Zooniverse, which can be done with ChildProject's extract-chunks function.

However, these samples may contain private information about the participants. Therefore, they cannot be shared as is on a public crowd-sourcing platform. We therefore configure extract-chunks to split these samples into 500ms chunks, which will be classified in random order, thus preventing the recovery of sensitive information by the contributors:

datalad get vandam-data/recordings/converted/standard

child-project zooniverse extract-chunks vandam-data \

--keyword chi_fem \

--chunks-length 500 \

--segments samples/chi_fem/segments_20210716_184443.csv \

--destination samples/chi_fem/chunks \

--profile standard

This will extract the audio chunks into samples/chi_fem/chunks/chunks/ and write a metadata file into samples/chi_fem/chunks (in our case, as samples/chi_fem/chunks/chunks_20210716_191944.csv).

See ChildProject's documentation for more information about the Zooniverse pipeline.

Uploading audio chunks to Zooniverse

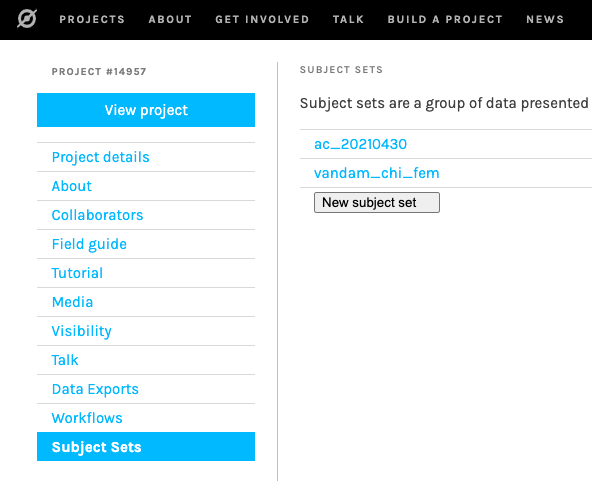

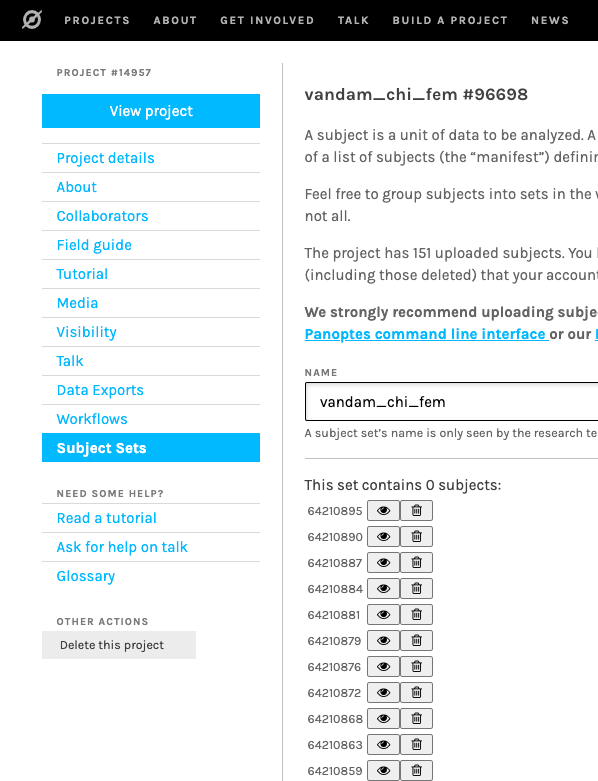

Once the chunks have been extracted, the next step is to upload them to Zooniverse. Note that due to quotas, it is recommended to upload only a few at time (e.g. 1000 per day).

You will need to provide the numerical id of your Zooniverse project; you will also need to set Zooniverse credentials as environment variables:

export ZOONIVERSE_LOGIN=""

export ZOONIVERSE_PWD=""

export PROJECT_ID=14957

child-project zooniverse upload-chunks \

--chunks samples/chi_fem/chunks/chunks_20210716_191944.csv \

--project-id $PROJECT_ID \

--set-name vandam_chi_fem \

--amount 1000

This will display a message for each chunk:

...

uploading chunk BN32_010007.mp3 (25153080,25153580)

uploading chunk BN32_010007.mp3 (45016146,45016646)

uploading chunk BN32_010007.mp3 (46794141,46794641)

uploading chunk BN32_010007.mp3 (14107752,14108252)

uploading chunk BN32_010007.mp3 (35709983,35710483)

uploading chunk BN32_010007.mp3 (45433933,45434433)

uploading chunk BN32_010007.mp3 (35711483,35711983)

uploading chunk BN32_010007.mp3 (38737938,38738438)

uploading chunk BN32_010007.mp3 (24586156,24586656)

uploading chunk BN32_010007.mp3 (15556956,15557456)

uploading chunk BN32_010007.mp3 (28439601,28440101)

uploading chunk BN32_010007.mp3 (27317629,27318129)

uploading chunk BN32_010007.mp3 (38391252,38391752)

...

The subject set and its subjects (i.e. the chunks) now appears in the project:

Retrieving classifications

The classifications performed by citizens on Zooniverse for this project can be retrieved with ChildProject's retrieve-classifications command:

child-project zooniverse retrieve-classifications \

--destination classifications/classifications.csv \

--project-id $PROJECT_ID

$ head classifications/classifications.csv

id,user_id,subject_id,task_id,answer_id,workflow_id,answer

335442480,2202359,61513545,T1,4,17576,Junk

335442502,2202359,61513542,T1,4,17576,Junk

335442523,2202359,61513540,T1,4,17576,Junk

335442614,2202359,61513546,T1,4,17576,Junk

335442630,2202359,61513544,T1,4,17576,Junk

335443247,2221541,61513546,T1,4,17576,Junk

335443443,2202359,61513544,T1,4,17576,Junk

335450309,1078249,61513540,T1,4,17576,Junk

335457868,2202359,61514279,T1,4,17576,Junk

Two special notes about the output of retrieve-classifications:

- It contains no information related to the metadata of the input dataset, which is the desired behavior (Zooniverse should not store any data that might compromise the privacy of the participants)

- It contains all the classifications for this project, including those for data outside of the current campaign.

Matching classifications back to the metadata

Classifications can be matched to the original metadata (the recording from which the clips were extracted, the timestamps of the clips, etc.) with classifications/match.py:

$ python classifications/match.py --help

usage: match.py [-h] --chunks CHUNKS [CHUNKS ...] classifications output

match classifications with extracted chunks

positional arguments:

classifications classifications file

output output

optional arguments:

-h, --help show this help message and exit

--chunks CHUNKS [CHUNKS ...]

list of chunks

python classifications/match.py \

classifications/classifications.csv \

classifications/matched.csv \

--chunks samples/chi_fem/chunks/chunks*.csv

| id | user_id | subject_id | task_id | answer_id | workflow_id | answer | index | recording_filename | onset | offset | segment_onset | segment_offset | wav | mp3 | date_extracted | uploaded | project_id | subject_set | zooniverse_id | keyword |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 346474371 | 2202359.0 | 64210633 | T1 | 4 | 17576 | Junk | 38 | BN32_010007.mp3 | 24587156 | 24587656 | 24584902 | 24588410 | BN32_010007_24587156_24587656.wav | BN32_010007_24587156_24587656.mp3 | 2021-07-16 19:19:44 | True | 14957 | vandam_chi_fem | 64210633 | chi_fem |

| 346474611 | 2202359.0 | 64210607 | T1 | 4 | 17576 | Junk | 30 | BN32_010007.mp3 | 14108752 | 14109252 | 14107993 | 14109011 | BN32_010007_14108752_14109252.wav | BN32_010007_14108752_14109252.mp3 | 2021-07-16 19:19:44 | True | 14957 | vandam_chi_fem | 64210607 | chi_fem |

| 346474648 | 2202359.0 | 64210647 | T1 | 4 | 17576 | Junk | 43 | BN32_010007.mp3 | 4713603 | 4714103 | 4713263 | 4714944 | BN32_010007_4713603_4714103.wav | BN32_010007_4713603_4714103.mp3 | 2021-07-16 19:19:44 | True | 14957 | vandam_chi_fem | 64210647 | chi_fem |

| 346474683 | 2202359.0 | 64210890 | T1 | 4 | 17576 | Junk | 125 | BN32_010007.mp3 | 4713103 | 4713603 | 4713263 | 4714944 | BN32_010007_4713103_4713603.wav | BN32_010007_4713103_4713603.mp3 | 2021-07-16 19:19:44 | True | 14957 | vandam_chi_fem | 64210890 | chi_fem |

| 346474902 | 2202359.0 | 64210872 | T1 | 4 | 17576 | Junk | 119 | BN32_010007.mp3 | 39329854 | 39330354 | 39328511 | 39331697 | BN32_010007_39329854_39330354.wav | BN32_010007_39329854_39330354.mp3 | 2021-07-16 19:19:44 | True | 14957 | vandam_chi_fem | 64210872 | chi_fem |

| 346475101 | 2202359.0 | 64210613 | T1 | 4 | 17576 | Junk | 32 | BN32_010007.mp3 | 4149238 | 4149738 | 4148967 | 4149510 | BN32_010007_4149238_4149738.wav | BN32_010007_4149238_4149738.mp3 | 2021-07-16 19:19:44 | True | 14957 | vandam_chi_fem | 64210613 | chi_fem |

| 346475237 | 2202359.0 | 64210774 | T1 | 4 | 17576 | Junk | 86 | BN32_010007.mp3 | 17157819 | 17158319 | 17157511 | 17158127 | BN32_010007_17157819_17158319.wav | BN32_010007_17157819_17158319.mp3 | 2021-07-16 19:19:44 | True | 14957 | vandam_chi_fem | 64210774 | chi_fem |

| 346474475 | 2202359.0 | 64210728 | T1 | 0 | 17576 | Baby | 70 | BN32_010007.mp3 | 37978055 | 37978555 | 37978100 | 37979011 | BN32_010007_37978055_37978555.wav | BN32_010007_37978055_37978555.mp3 | 2021-07-16 19:19:44 | True | 14957 | vandam_chi_fem | 64210728 | chi_fem |

| 346474475 | 2202359.0 | 64210728 | T3 | 1 | 17576 | Non-Canonical | 70 | BN32_010007.mp3 | 37978055 | 37978555 | 37978100 | 37979011 | BN32_010007_37978055_37978555.wav | BN32_010007_37978055_37978555.mp3 | 2021-07-16 19:19:44 | True | 14957 | vandam_chi_fem | 64210728 | chi_fem |