Nearest Neighbour Search using binary Neural Networks and Product Quantization

We address the problem of Nearest Neighbour Search (NNS) under the Euclidean distance, the Hamming distance, or another distance metric. To accelerate the search for the nearest neighbour in large datasets, many methods rely on a coarse-to-fine approach. In this work we propose to combine Product Quantization (PQ) with Willshaw Neural Networks (WNN), i.e. binary neural associative memories.
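To make the coarse-to-fine idea concrete, here is a minimal sketch of how Willshaw-style binary associative memories could serve as the coarse step. It is an illustration under stated assumptions, not the implementation from the paper: the function names `build_memories` and `search`, the parameter `n_probe`, the sequential partitioning of the dataset into groups, and the use of Hamming distance in the fine step are all choices made for this example, and the PQ stage is left out entirely.

```python
import numpy as np

def build_memories(patterns, n_groups):
    """Partition binary patterns into groups; store each group in one Willshaw matrix.

    Assumption for this sketch: groups are consecutive chunks of the dataset.
    """
    groups = np.array_split(np.arange(len(patterns)), n_groups)
    memories = []
    for idx in groups:
        X = patterns[idx].astype(np.int64)
        # Willshaw rule: a binary connection (i, j) is set as soon as units i and j
        # are co-active in at least one stored pattern (OR of outer products).
        W = (X.T @ X) > 0
        memories.append(W)
    return groups, memories

def search(query, patterns, groups, memories, n_probe=1):
    """Coarse step: rank memories by response. Fine step: exhaustive Hamming search."""
    q = query.astype(np.int64)
    # Score = number of stored connections between the active units of the query.
    scores = [int(q @ W.astype(np.int64) @ q) for W in memories]
    best = np.argsort(scores)[::-1][:n_probe]
    # Only the patterns stored in the best-scoring memories are compared exhaustively.
    candidates = np.concatenate([groups[g] for g in best])
    dists = np.count_nonzero(patterns[candidates] != query, axis=1)
    return int(candidates[np.argmin(dists)])

# Toy data (hypothetical): 8 patterns of dimension 32, each with 4 active units.
patterns = np.zeros((8, 32), dtype=np.uint8)
for i in range(8):
    patterns[i, 4 * i:4 * i + 4] = 1

groups, memories = build_memories(patterns, n_groups=4)
nearest = search(patterns[5], patterns, groups, memories, n_probe=1)
```

In this sketch, raising `n_probe` trades computation for accuracy: more groups are searched exhaustively, so the true nearest neighbour is less likely to be missed by the coarse step.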

This work was published at the 2016 International Joint Conference on Neural Networks (IJCNN), IEEE.

To be cited as:

Ferro, D., Gripon, V., & Jiang, X. (2016, July). Nearest neighbour search using binary neural networks. In 2016 International Joint Conference on Neural Networks (IJCNN) (pp. 5106-5112). IEEE. doi:10.1109/IJCNN.2016.7727873

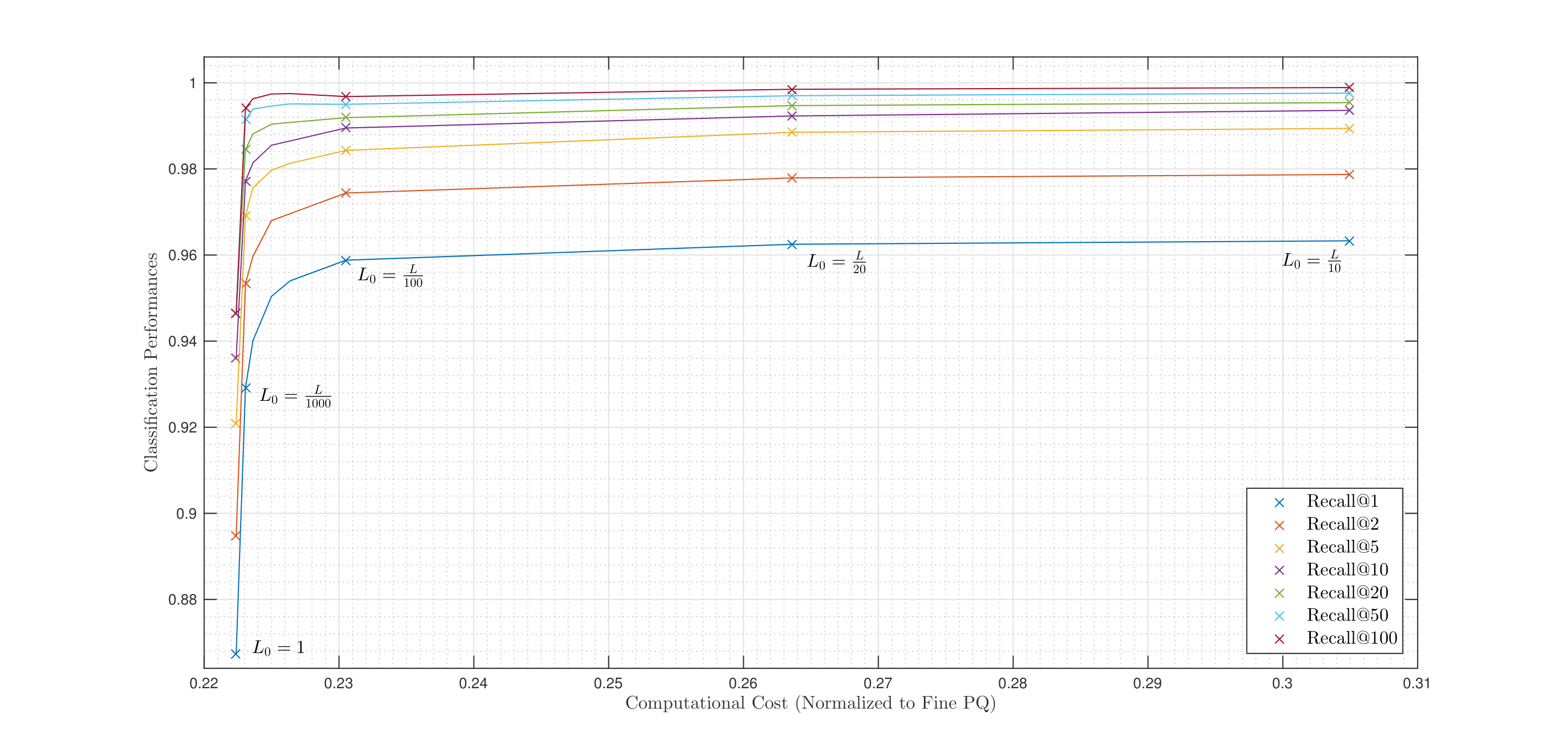

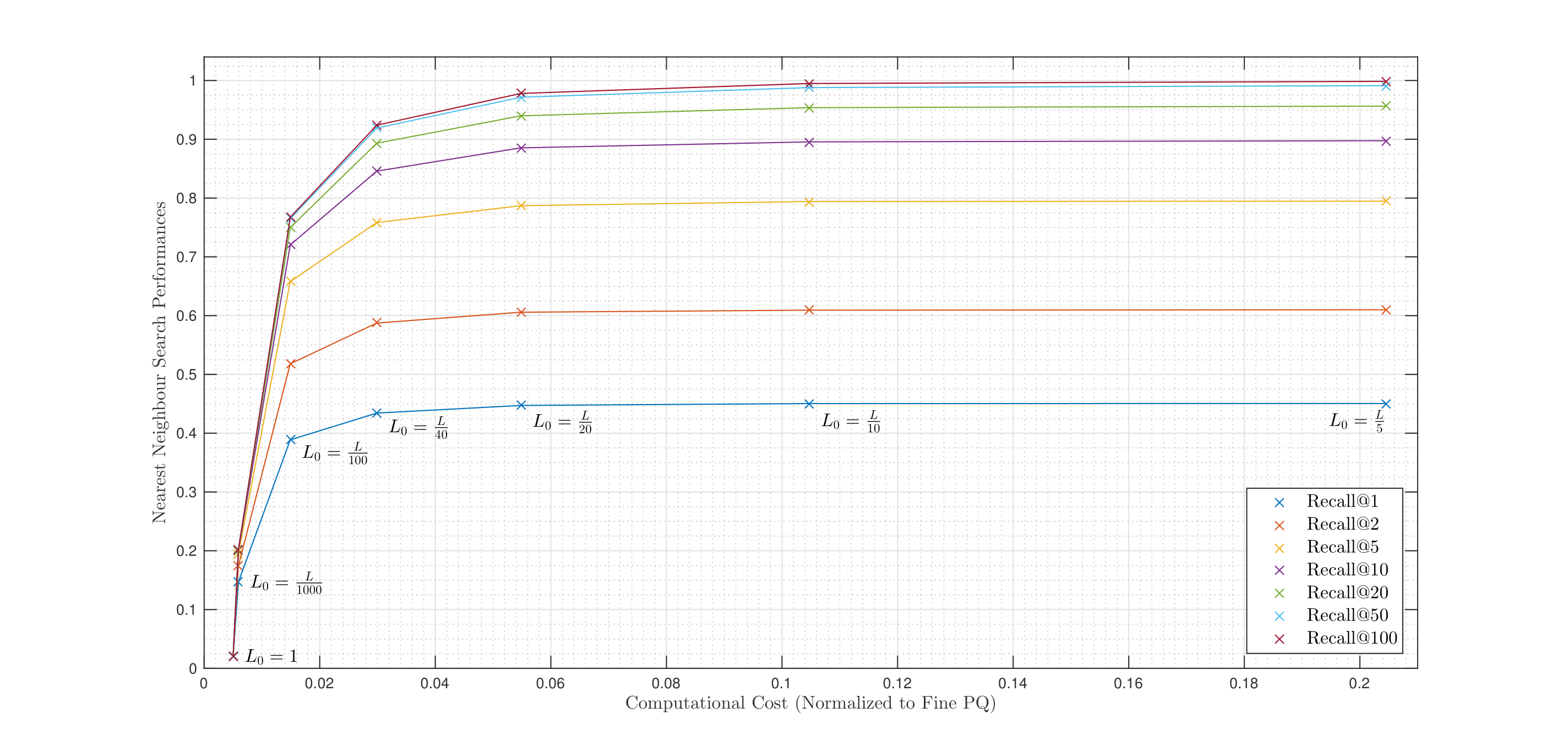

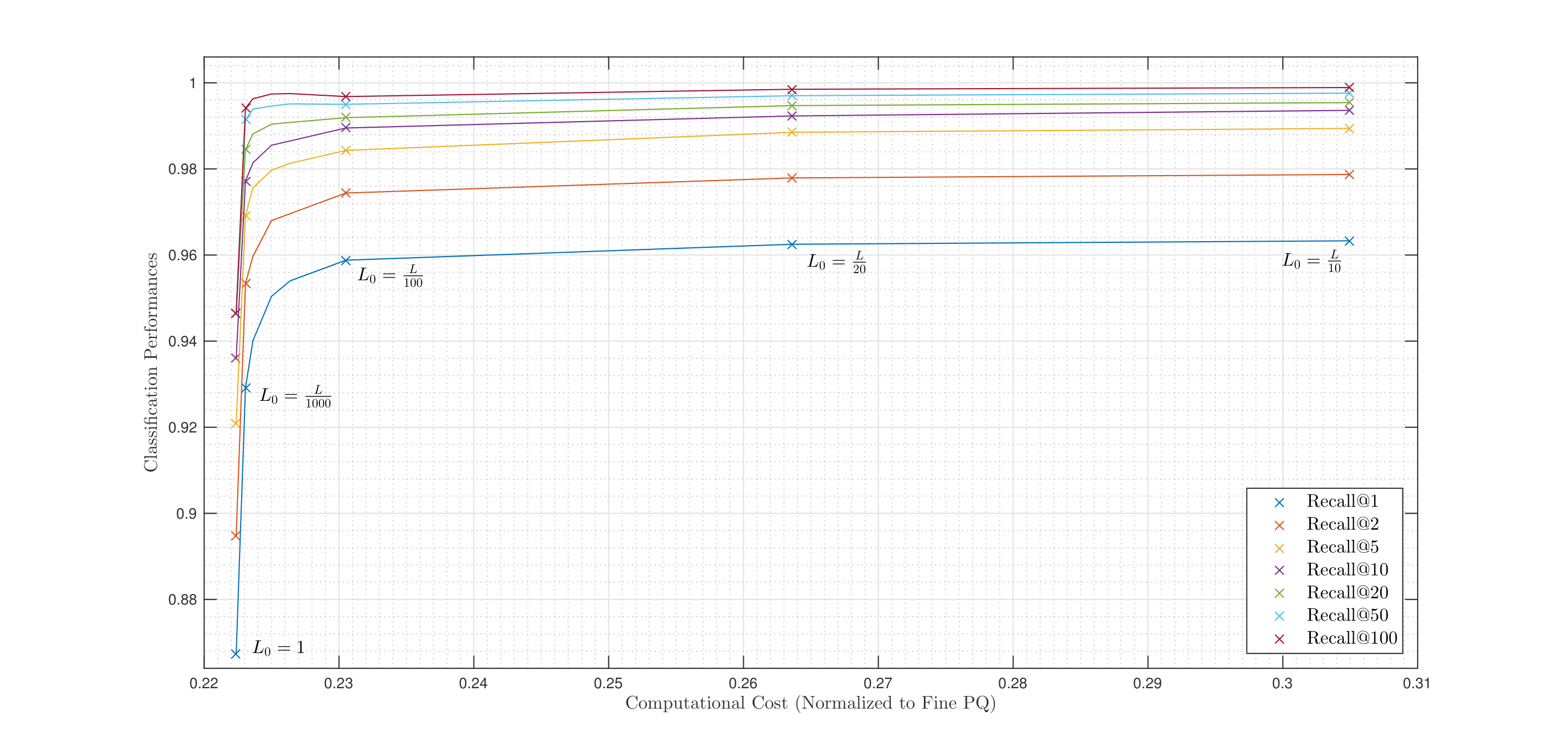

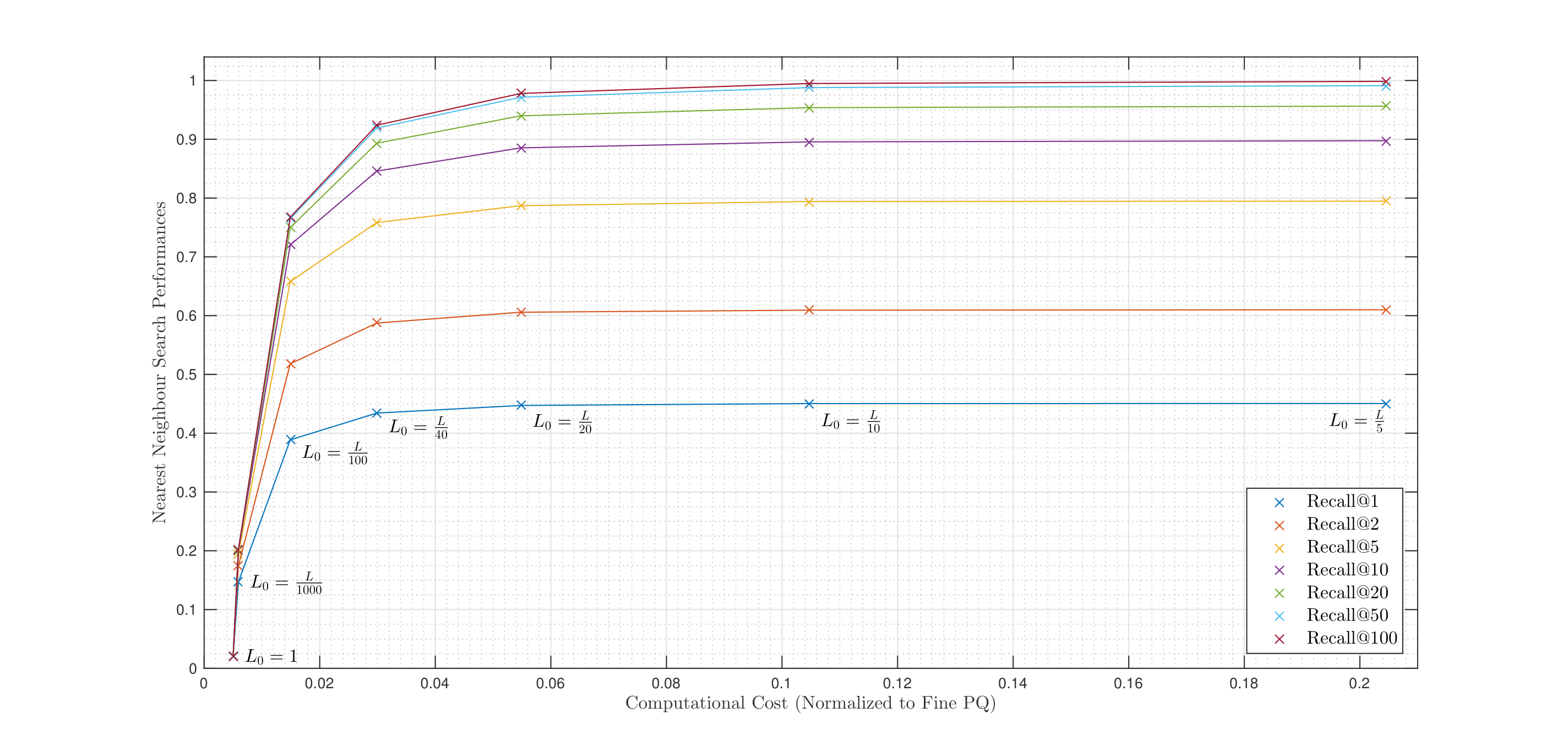

We show the main results, i.e. performance versus computational cost, for two applications:

1) Classification of handwritten digits on the MNIST (NIST, USA) dataset (60k train, 10k test).

2) Euclidean-distance NNS of image SIFT descriptors on the TEXMEX (IRISA, FR) dataset (1M train, 10k test).

The project was developed between April and September 2015 during my internship at Télécom Bretagne, Brest (FR), under the supervision of Prof. Claude Berrou and Vincent Gripon.