Datasets for Dominiak et al., 2019

Raw and some derived datasets supplementary to:

Dominiak SE, Nashaat MA, Sehara K, Oraby H, Larkum ME, Sachdev RNS (2019) Whisking asymmetry signals motor preparation and the behavioral state of mice. J Neurosci, in press.

Contents

How datasets were generated

How this dataset can be read

Repository information

How datasets were generated

Setup

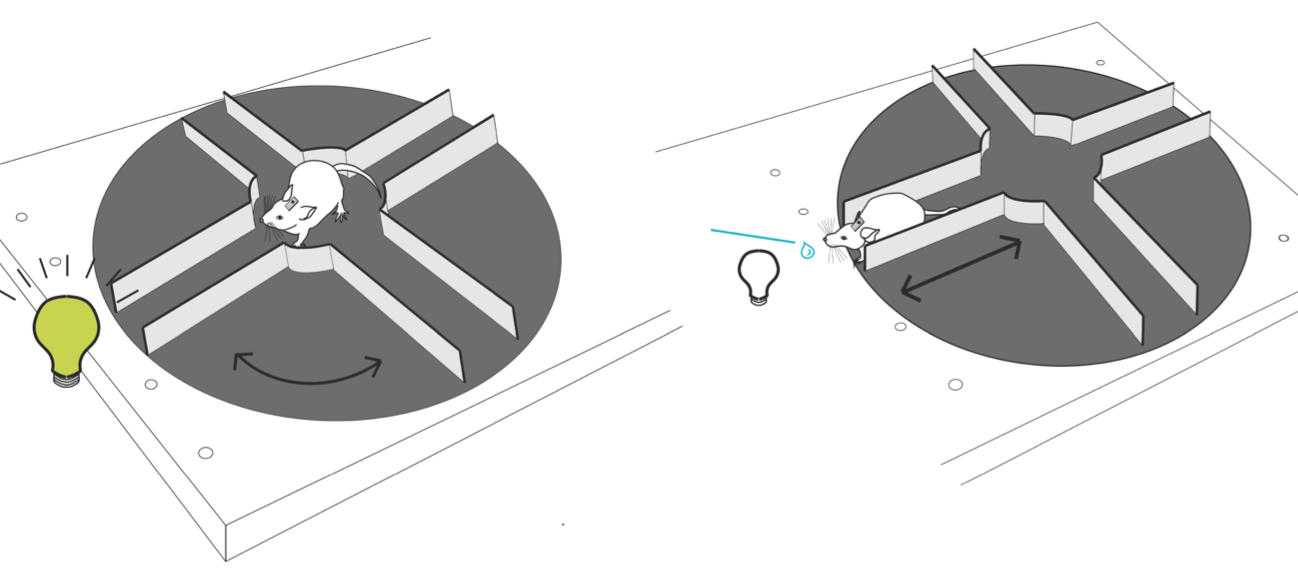

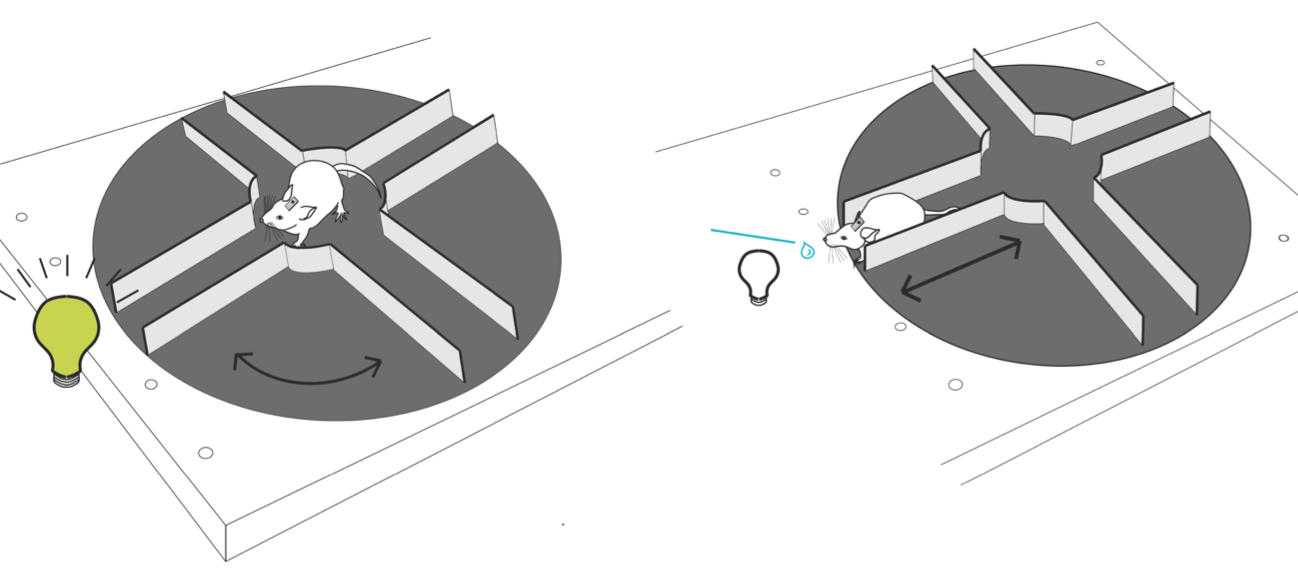

We used the Airtrack platform (Nashaat et al., 2016) (above) to place a head-fixed mouse in an environment it could explore as if it were freely behaving. Pumped air flow lets the plus-maze environment float on the table, so the head-fixed mouse (only the head-post holder is shown, at the center of the plus maze) can easily propel the environment.

The position of the floating plus maze was monitored by an Arduino microcontroller, which also controlled the behavioral task. For more details of the setup, please refer to the Airtrack website (https://www.neuro-airtrack.com).

Task

The water-restricted, head-fixed mouse performed a simple plus-maze task:

- Each trial started when the mouse was at the end of a lane (not shown in the figure above).

- The mouse had to move backward (i.e. push the plus maze forward) to reach the central arena, and then propel the maze to find the correct, rewarding lane (left image in the figure):

    - An LED-based visual cue in front of the animal signaled whether the lane in front of it was the correct one.

        - If the lane in front of the animal was the correct one, the LED was off.

        - If the lane in front of the animal was not the correct one, the LED was on.

- When the mouse walked to the end of the correct lane, it was given access to the lick port for a short time (~2 s) (right image in the figure):

    - The lick port was mounted on a linear stage (i.e. the apparatus on the red mechanical arm in the setup figure) and was normally kept in the retracted position, out of the mouse's reach.

    - The Airtrack microcontroller detected the position of the animal and moved the linear stage to bring the lick port right in front of the animal.
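The cue and reward rules above can be restated as a minimal Python sketch. It only mirrors the logic described in the text: the actual task ran on the Arduino microcontroller, and the lane names and function names below are hypothetical, chosen for illustration.

```python
# Hypothetical labels for the four lanes of the plus maze (not taken from the dataset).
LANES = ("north", "east", "south", "west")


def led_is_on(facing_lane: str, correct_lane: str) -> bool:
    """Visual cue: the LED is off in front of the correct lane and on otherwise."""
    return facing_lane != correct_lane


def should_present_lick_port(current_lane: str, at_lane_end: bool, correct_lane: str) -> bool:
    """Advance the lick port only once the mouse has reached the end of the correct lane."""
    return at_lane_end and current_lane == correct_lane


if __name__ == "__main__":
    correct = "east"
    for lane in LANES:
        print(lane, "LED on" if led_is_on(lane, correct) else "LED off")
```

Note that the cue is "negative": a lit LED means "keep searching", so the mouse stops on the lane where the LED goes dark.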

Experimental conditions

Acquisition and basic analysis

A high-speed camera recorded the whisking behavior of the animal.

Raw videos

The raw videos may be found in the videos domain of the raw dataset.

Behavioral states

For some videos of the dataset, the behavioral states of the animal were manually annotated:

- AtEnd: the animal was standing at the end of a lane, without doing anything apparent.

- Midway: the animal was standing in the middle of a lane.

- Backward: the animal was moving backward along a lane.

- Left/Right: the animal was turning left or right (from the animal's point of view) in the central arena.

- Forward: the animal was moving forward along a lane.

- Expect: the animal was standing at the end of the correct, rewarding lane, waiting for the lick port to come forward.

- Lick: the lick port was in front of the animal.

The annotations were stored as CSV files (see the states domain of the raw dataset).

Note that frame numbers in the annotations are one-based, i.e. the first frame of each video is referred to as frame 1.
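Because the annotations use one-based frame numbers while most array libraries index from zero, a conversion step is needed when aligning states with video frames. The sketch below assumes, purely for illustration, that each CSV row holds a state name and the first and last frame of that state (inclusive), in hypothetical columns named State, From and To; please check helper.py and walkthrough.ipynb for the actual layout of the states files.

```python
import csv
import io


def load_state_ranges(text):
    """Parse (state, first_frame, last_frame) tuples from CSV text.

    ASSUMPTION: columns named 'State', 'From', 'To', holding one-based,
    inclusive frame numbers. The real column layout may differ.
    """
    reader = csv.DictReader(io.StringIO(text))
    return [(row["State"], int(row["From"]), int(row["To"])) for row in reader]


def per_frame_labels(ranges, n_frames):
    """Expand one-based frame ranges into a list indexed by zero-based frame index."""
    labels = [None] * n_frames  # frames without an annotation stay None
    for state, first, last in ranges:
        # One-based inclusive [first, last] becomes zero-based half-open [first - 1, last).
        start, stop = first - 1, last
        for i in range(max(start, 0), min(stop, n_frames)):
            labels[i] = state
    return labels


# Illustrative input only, not taken from the dataset.
example = "State,From,To\nAtEnd,1,3\nBackward,4,6\n"
labels = per_frame_labels(load_state_ranges(example), 7)
print(labels)  # ['AtEnd', 'AtEnd', 'AtEnd', 'Backward', 'Backward', 'Backward', None]
```

For a real file, the text can be read with `open(path, newline="")` before passing it to `load_state_ranges`.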

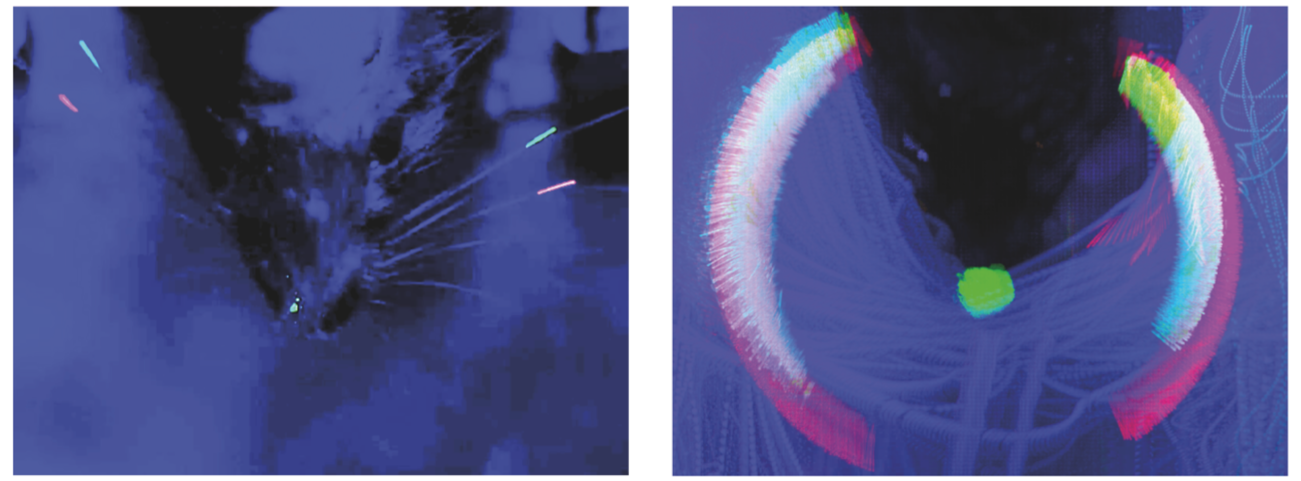

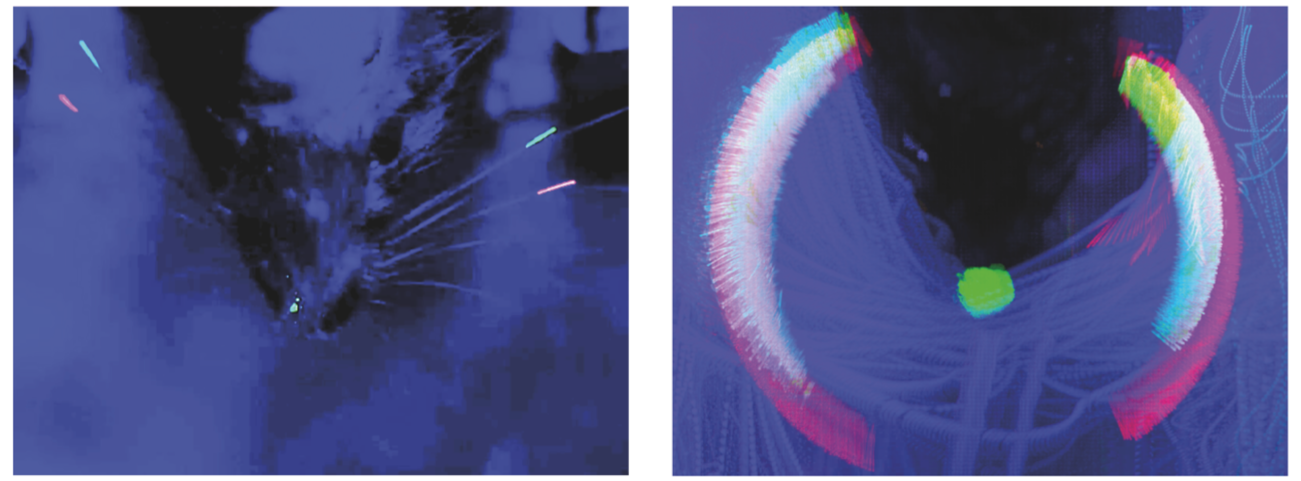

Body-part tracking using UV paint

To facilitate tracking, a small amount of UV paint ("UV glow", to be precise) was applied to the body parts of interest. Some mice received paint only on their whiskers, whereas others also had their noses painted (left in the figure above).

Tracking was performed using either of the following programs (both implement the same tracking algorithm):

In brief, the procedure was as follows:

- A maximum projection image was computed for each video (right in the figure above; also see the projection domain of the tracking dataset).

- The ROIs for each body part of interest were determined manually (see the ROI domain of the tracking dataset).

- Within each ROI in every frame, pixels having the specified hue (i.e. color) values were collected, and the luminance-weighted average of their positions was defined as the tracked position of the body part.

- The tracked positions were stored as a CSV file (see the tracked domain of the tracking dataset).
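The projection and hue-based tracking steps above can be sketched with NumPy as follows. This is an illustrative reconstruction, not the code of the tracking programs themselves. Several details are assumptions: frames are RGB `uint8` arrays of shape (n_frames, height, width, 3), an ROI is given as (x, y, width, height) in pixels, the hue range is expressed on a 0 to 1 scale, and "luminance" is approximated by Rec. 601 luma weights.

```python
import numpy as np

# ASSUMPTION: Rec. 601 luma weights as a stand-in for "luminance".
LUMA_WEIGHTS = np.array([0.299, 0.587, 0.114])


def max_projection(frames):
    """Pixel-wise maximum over time; frames has shape (n_frames, height, width, 3)."""
    return frames.max(axis=0)


def hue(rgb):
    """HSV hue in [0, 1) for an (..., 3) RGB uint8 array; NaN for gray pixels."""
    rgb = rgb.astype(float) / 255.0
    r, g, b = rgb[..., 0], rgb[..., 1], rgb[..., 2]
    maxc = rgb.max(axis=-1)
    delta = maxc - rgb.min(axis=-1)
    h = np.full(maxc.shape, np.nan)  # hue is undefined where delta == 0
    colored = delta > 0
    rmax = colored & (maxc == r)
    gmax = colored & (maxc == g) & ~rmax
    bmax = colored & ~rmax & ~gmax
    h[rmax] = ((g - b)[rmax] / delta[rmax]) % 6
    h[gmax] = (b - r)[gmax] / delta[gmax] + 2
    h[bmax] = (r - g)[bmax] / delta[bmax] + 4
    return h / 6.0


def track_in_roi(frame, roi, hue_range):
    """Luminance-weighted mean (x, y) of ROI pixels whose hue lies in hue_range.

    roi = (x, y, width, height); hue_range = (low, high) on a 0-1 scale.
    Returns (nan, nan) when no pixel matches.
    """
    x0, y0, w, h = roi
    patch = frame[y0:y0 + h, x0:x0 + w]
    hues = hue(patch)
    low, high = hue_range
    if low <= high:
        mask = (hues >= low) & (hues <= high)
    else:  # the range wraps around hue 0 (e.g. reds)
        mask = (hues >= low) | (hues <= high)
    weights = (patch.astype(float) @ LUMA_WEIGHTS) * mask
    total = weights.sum()
    if total == 0:
        return (np.nan, np.nan)
    ys, xs = np.indices(weights.shape)
    # Shift local ROI coordinates back to full-frame pixel coordinates.
    return (x0 + (xs * weights).sum() / total, y0 + (ys * weights).sum() / total)


def track_video(frames, rois, hue_range):
    """Track each body part (name -> roi) in every frame; returns name -> (n_frames, 2) array."""
    return {
        name: np.array([track_in_roi(frame, roi, hue_range) for frame in frames])
        for name, roi in rois.items()
    }
```

In this sketch the ROIs would be chosen by inspecting the output of `max_projection`, since the painted body parts trace out their full range of motion in that image. Each (n_frames, 2) array returned by `track_video` could then be written out with the standard `csv` module or `numpy.savetxt`.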

How this dataset can be read

We prepared the helper.py Python module to make reading the dataset easier.

Please check the walkthrough.ipynb Jupyter notebook to see how to use it to read the dataset.

General information about Jupyter can be found on the Jupyter project website (https://jupyter.org).

Repository information

Authors

Please refer to REPOSITORY.json.

License

Creative Commons CC0 1.0 Universal