Speaker confusion model

The Voice Type Classifier (VTC) is a speech detection algorithm that classifies each detected speech segment into one of four classes: Female adults, Male adults, the Key child wearing the recording device, and Other surrounding children. This allows to measure the input children are exposed to as they are acquiring language, as well as their own speech production.

However, the VTC is not perfectly accurate, and the speaker may be misidentified (or, speech may be left undetected).

For instance, the key child is occasionally confused with other children. Not only such confusions have consequences on the amount of speech measured within each of these four categories, but they also tend to generate spurious correlations

between the amount of speech produced by each kind of speaker. For instance, more actual "Key child" (CHI) speech will systematically and spuriously lead to more "Other children" (OCH) detected speech due to speaker misidentification, which in turn will appear as if there was a true correlation between CHI and OCH amounts' of speech.

The present bayesian models capture the errors of the VTC (false negatives and confusion between speakers) in order to assess the impact of such errors on correlational analyses.

Such models, in principle, also allow to recover the unbiased values of the parameters of interest (e.g., the true correlation between two speakers), by translating bias into larger posteriors on the parameters.

The mathematical description of the models can be found in docs/models.pdf.

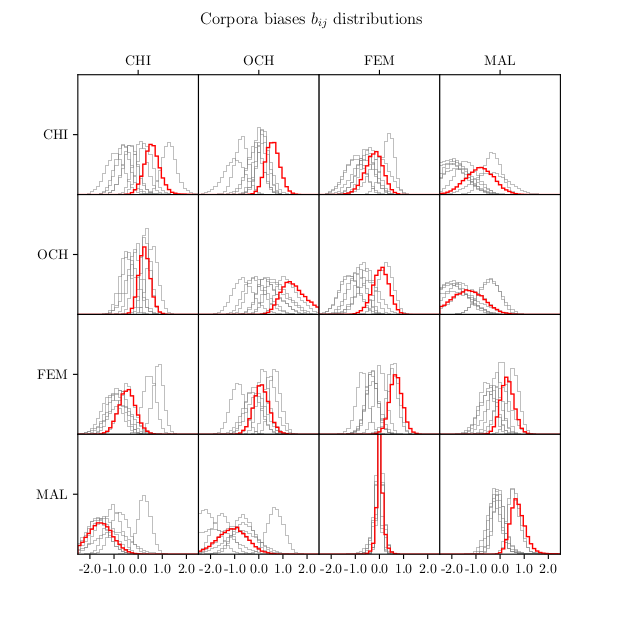

The graphs below show how large are the correlation and regression coefficients that we expect while assessing the correlations between the child output and the input from different speakers, purely due to algorithmic errors.

Installation

The installation of this repository requires DataLad (apt install datalad on Linux; brew install datalad on MacOS; read more, including instructions for windows, here)

Once DataLad is installed, the repository can be installed:

datalad install -r git@gin.g-node.org:/LAAC-LSCP/speaker-confusion-model.git

This requires access to the corpora that are used to train the model.

- Install necessary packages

pip install -r requirements.txt

Run the models

Command-Line Arguments

The models can be found in code/models. Running the model simultaneously performs the two following tasks:

- Deriving the confusion rates and their posterior distribution

- Generating null-hypothesis samples (i.e. assuming no true correlation between speakers' amounts of speech) simulating a 40 children corpora with 8 recorded hours each.

There are two models:

code/models/simple.py: This model neglects population-level (i.e corpus level) biases.code/models/corpus_bias.py: This model does account for potential corpus-level biases.

Models can be run from the command line:

$ python code/models/simple.py --help

usage: main.py [-h] [--group {corpus,child}] [--chains CHAINS] [--samples SAMPLES] [--validation VALIDATION] [--output OUTPUT]

main model described throughout the notes.

optional arguments:

-h, --help show this help message and exit

--group {corpus,child}

--chains CHAINS

--samples SAMPLES

--validation VALIDATION

--output OUTPUT

The --group parameter controls the primary level of the hierarchical model. The model indeed assumes that confusion rates (i.e. confusion probabilities) vary across corpora (corpus) or children (child).

The --chains parameter sets the amount of MCMC chains, and --samples controls the amount of MCMC samples, warmup excluded.

The --validation parameter sets the amount of annotation clips used for validation rather than training. Set it to 0 in order to use as much data for training as possible.

The --output parameter controls the output destination. Training data will be saved to output/samples/data_{output}.pickle and the MCMC samples are saved as output/samples/fit_{output}.parquet

Confusion probabilities

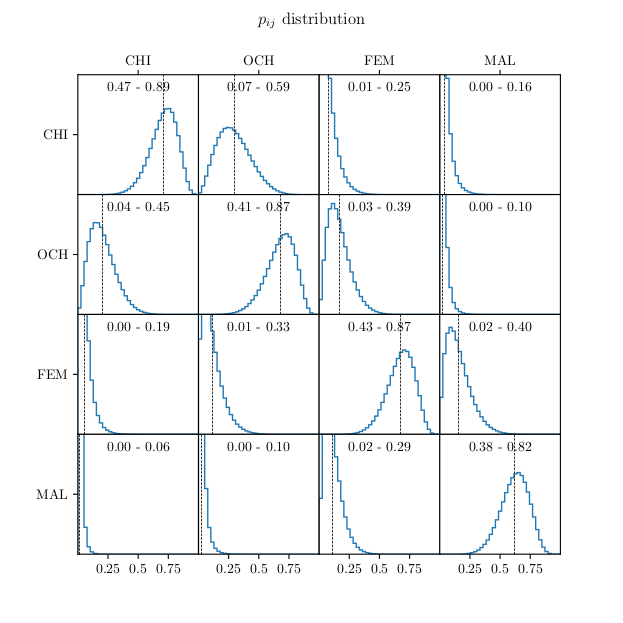

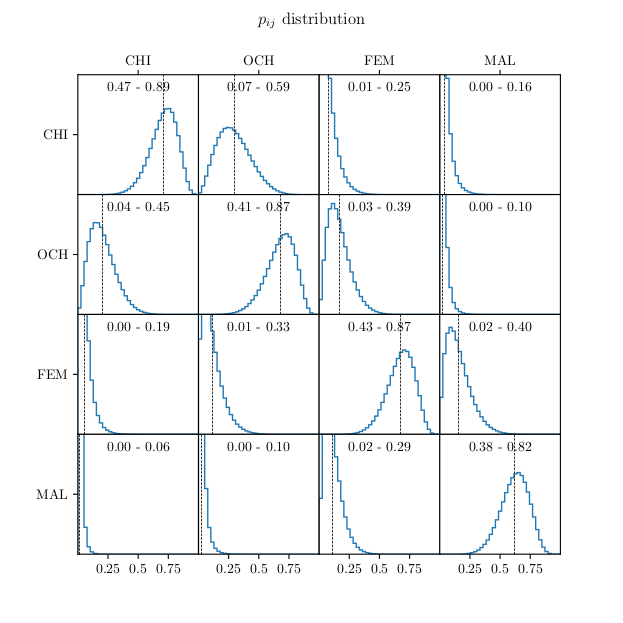

The marginal posterior distribution of the confusion matrix is shown below (model with corpus bias, as estimated for the Vanuatu dataset):

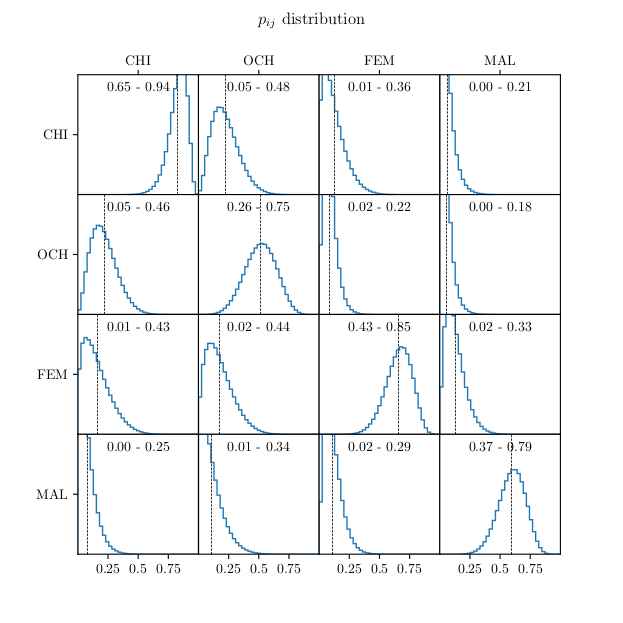

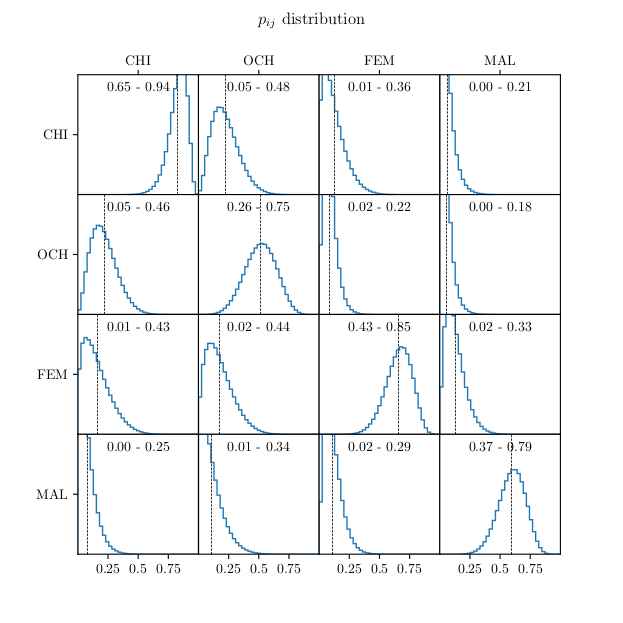

The marginal posterior distribution of the confusion matrix is shown below (model with corpus bias, as estimated for the Vandam dataset, which is a subset of Cougar):

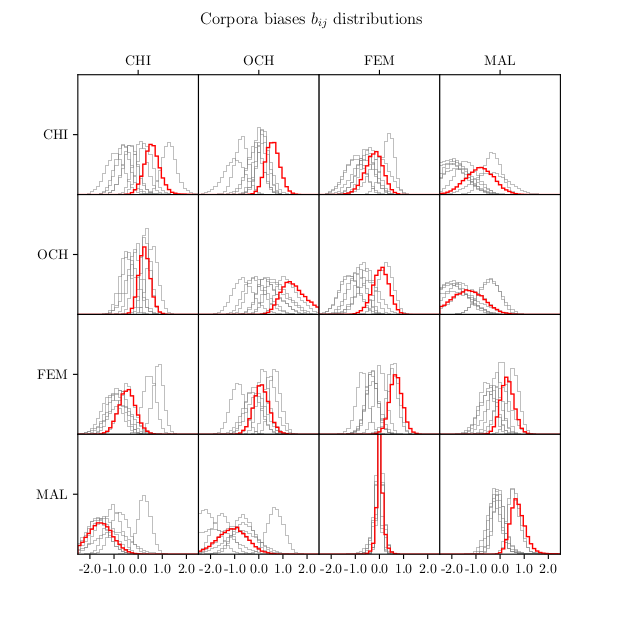

Corpus-level bias

The posterior distribution for the bias parameter of each corpus from the training set is shown below.

The distributions for "Vanuatu" are shown in red.

Speech distribution

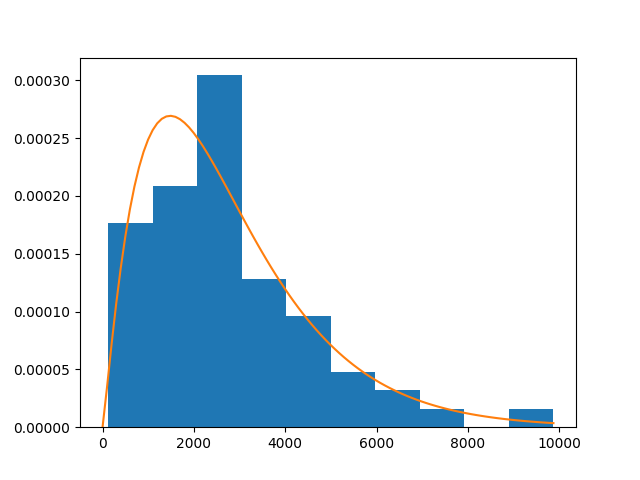

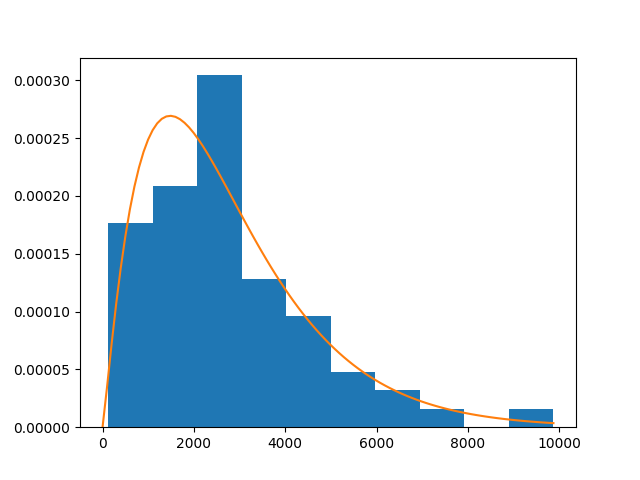

Speech distributions used to generate the simulated "null-hypothesis" corpora are fitted against the training data using Gamma distributions. The simplest models rely on a MLE fit of a global distribution based on all corpora, using the code available in code/models/speech_distribution.

The match between the training data and the Gamma parametrization can be observed in various plots in output. See below for the Key child (vocalizations for 9 hours of data from 9am to 6pm):

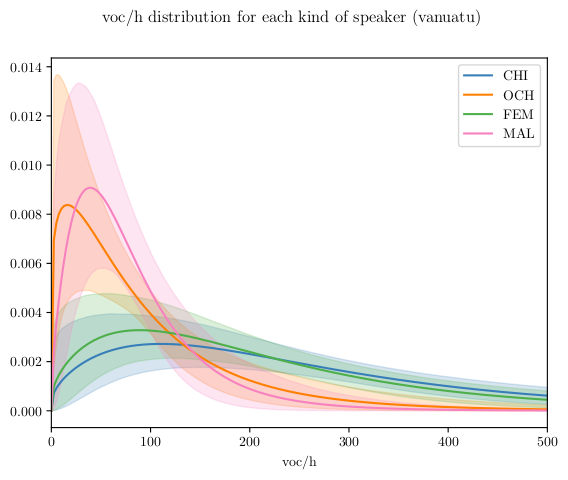

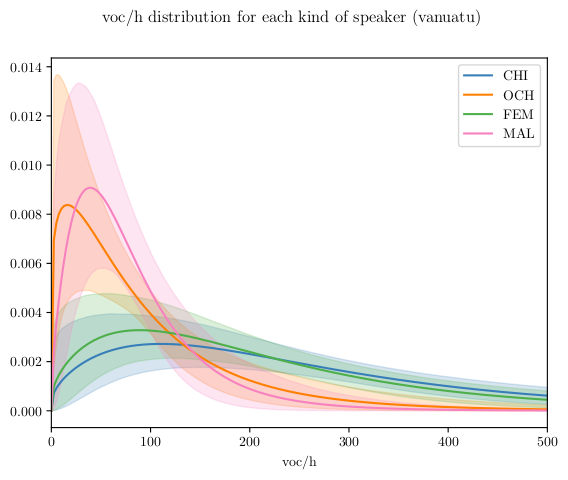

We also provide a model with a fully bayesian fit of the distributions of the true amount of speech among speakers. This allows to derive corpus-level distributions while capturing the uncertainty due to the limited amount of "ground truth" annotations. Here are, for instance, the posterior speech rate distributions (in voc/h) derived for Vanuatu: